Kids View ISIS Executions and Escort Ads Online in Seconds

Children Exposed to Inappropriate Content on Social Media Despite Age Verification Measures

Despite government efforts to implement age verification measures, children continue to be exposed to harmful content such as execution videos and escort services on social media platforms. An investigation by Malwarebytes has revealed that these safety measures are often easily bypassed, adding to growing concern about online child safety.

Enhanced Child Safety Checks

Tech providers have increasingly implemented stronger child safety checks, including age verification and restricted accounts for teenagers, following the introduction of the Online Safety Act last year. The law requires all websites and apps offering adult content to introduce age checks, which typically involve scanning a passport or driving license to ensure that only users aged 18 or over can access the content.

However, the investigation found that some age verification systems can be circumvented by children using simple tricks. Pieter Arntz, a senior researcher at Malwarebytes, noted that it is “very easy” to bypass these measures, with many children discovering toxic content through a simple search.

Vulnerabilities in Gaming Platforms

Gaming platforms such as Roblox let adults chat only after verifying their age, but this requirement does not apply to communities that function much like chat rooms. Malwarebytes researchers created an account claiming to be five years old, the minimum age for a Roblox user, and were still able to join communities flagged for using names and terms linked to fraud.

One such community, Fullz Ent., has over 740 members and claims to offer “high-quality clothing.” However, according to Arntz, “Fullz” is slang used in cybercriminal circles for stolen personal information, while “new clothes” refers to stolen payment card data. These terms may not be recognized as criminal by most parents, making them particularly dangerous.

Despite a disclaimer stating, “We Are Not Affiliated With Any Gangs,” the community was identified as a potential risk. Following the investigation, Roblox introduced facial age checks to limit communication between adults and children under 16.

Accessing Inappropriate Content on YouTube

YouTube Kids is designed for younger users with strict video filters and parental controls. However, Malwarebytes found that minors could access inappropriate content by creating a guest account via Google, which owns YouTube. This allowed access to videos featuring executions and fraudulent content.

The investigation also highlighted vulnerabilities on other platforms, such as Twitch and TikTok. While most platforms require users to be at least 13, a self-declared age is often all that is needed. Malwarebytes found that children could easily bypass these checks by registering with an email address that does not require age verification.

On Twitch, after falsely declaring they were over 18, researchers accessed an account offering “call-girl services” in India. The account included links to websites where users could browse escort ads and contact escorts via WhatsApp.

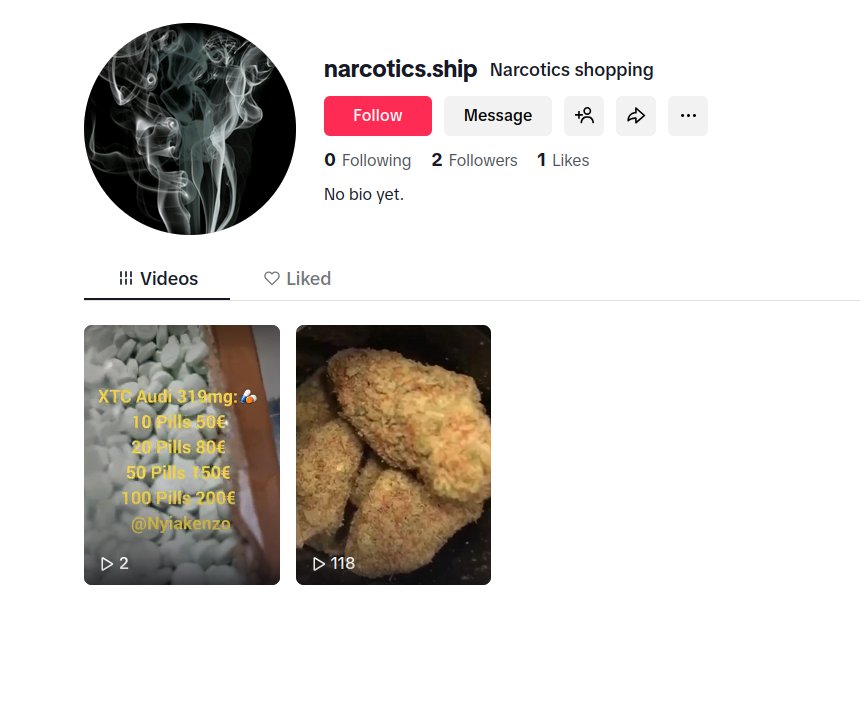

TikTok requires new users to enter their birthday, and those under 18 are given restrictive default privacy settings and limited features. However, if a user claims to be an adult, they lose these protections. Malwarebytes found content about “credit card fraud and identity theft tutorials” on the platform.

Instagram's Teen Accounts

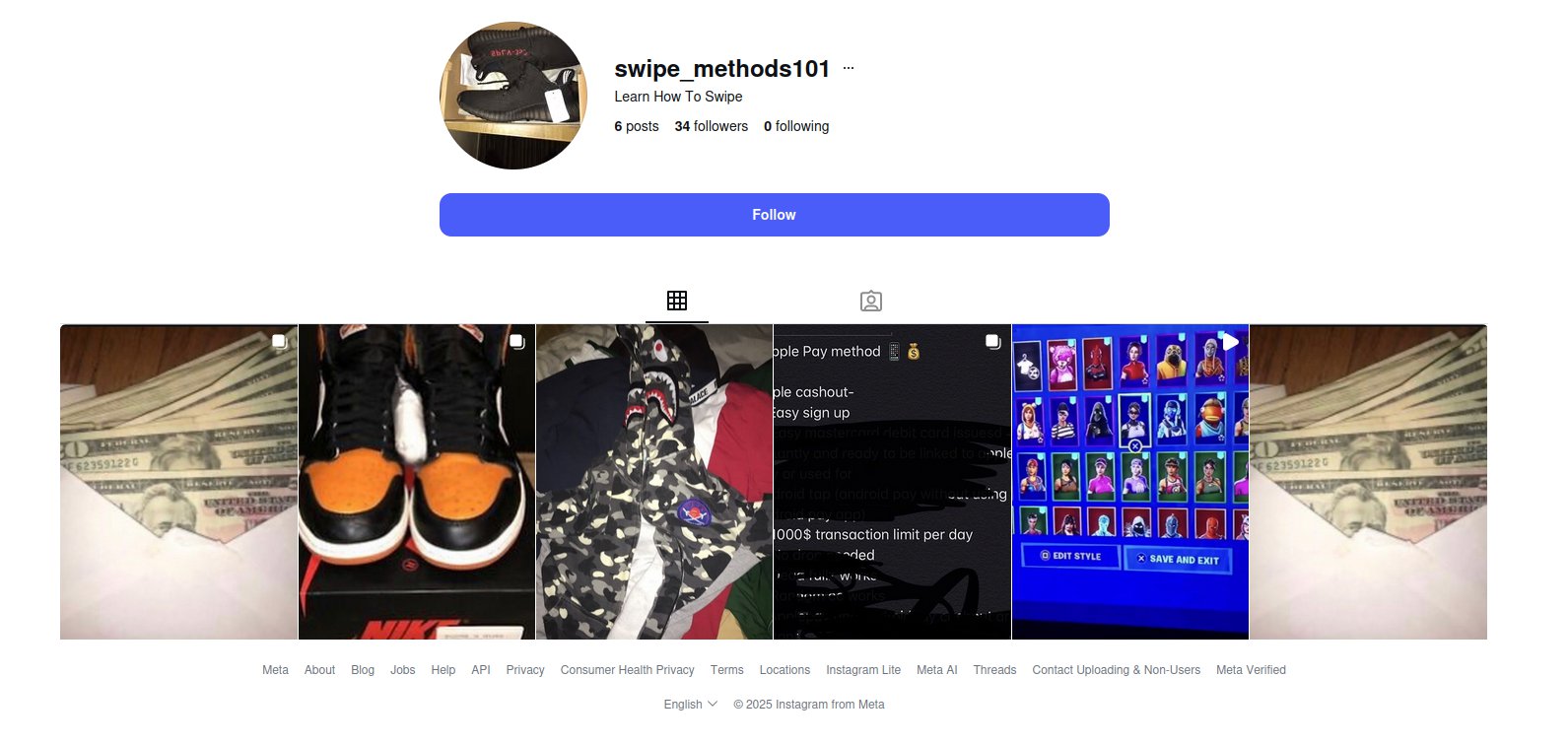

Instagram introduced teen accounts for users aged 13 to 17 in 2024, with parental controls enabled by default. These accounts are automatically private and have strict content filters. Even so, researchers using an account with a birthdate that made them 15 were able to find profiles promoting financial fraud.

Meta extended these restrictions to Facebook and Messenger users the following year. Arntz emphasized that the issue lies not with individual platforms but with the broader challenge of keeping up with the tech-savviness of young users.

Bypassing ID Scans with AI

Some children are even using AI-generated documents to bypass ID scans, according to Arntz. He pointed out that the core problem is not deception as such but the reliance on trusting what users report about themselves in an environment where anonymity is easy to achieve.

“Without robust digital identity verification or parental supervision, these measures serve more as legal cover for companies than real protection for young users,” he said.

Responses from Tech Companies

Roblox said it is moving beyond self-reported age checks and described itself as a pioneer in implementing age verification. A spokesperson highlighted the company’s commitment to safety, including content restrictions based on verified age and default chat filters.

Twitch said it is increasing its investment in youth safety tools, including content filters and 24/7 monitoring. Google, TikTok, and Meta have been contacted for comment.

As the digital landscape continues to evolve, the need for stronger safeguards and parental involvement remains critical to protect young users from harmful content.